In cloud platform scenarios, an API is often exposed through a gateway, which protects access to internal APIs by enforcing security policies such as CORS and IP whitelisting. A very common use case is illustrated below:

In this diagram, a single-page application (SPA) is hosted on the app.ozkary.com domain. The app needs to integrate with an internal API that is not available via a public domain. To enable access to the API, a gateway accepts inbound public traffic. This gateway is hosted on a different domain name, api.services.com. Right away, we can expect a cross-domain problem that we have to resolve. On the gateway, we can apply policies that allow an inbound request to reach the internal API.

To show a practical example, we first need to review what CORS is and why it is important for security. We can then talk about why we should use an API gateway and how to configure policies to protect an API.

What is CORS?

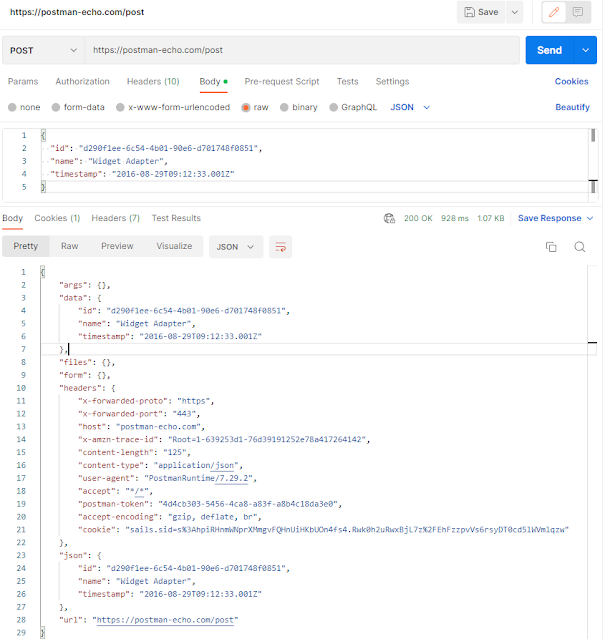

Cross-Origin Resource Sharing (CORS) is a security mechanism supported by modern browsers that lets applications hosted on one domain access resources hosted on different domains. In this case, the resource that needs to be shared is an API, accessed via a web request. The browser enforces this by sending a preflight request to the server before sending the actual request (browsers skip the preflight for certain simple requests, such as a plain GET with no custom headers). The preflight checks whether the client application is authorized to make the actual request.

When the app is ready to make a request to the API, the browser first sends an OPTIONS request, the preflight request, to the API. If the cross-origin server has the correct CORS policy, it returns an HTTP 200 status together with the CORS response headers. This authorizes the browser to send the actual request with all the request information and headers.
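To make the preflight exchange more concrete, here is a minimal Python sketch of the browser side. It is a simplified model, not how any particular browser is implemented: the function names `build_preflight` and `preflight_allows` are hypothetical, the `https://` scheme on the origin is an assumption, and wildcards in the method and header lists are not handled.

```python
def build_preflight(origin: str, method: str, headers: list) -> dict:
    """Headers a browser attaches to the OPTIONS preflight (simplified)."""
    return {
        "Origin": origin,
        "Access-Control-Request-Method": method,
        "Access-Control-Request-Headers": ", ".join(h.lower() for h in headers),
    }


def _split(value: str) -> set:
    """Turn a comma-separated header value into a lowercase set."""
    return {item.strip().lower() for item in value.split(",") if item.strip()}


def preflight_allows(preflight: dict, status: int, response_headers: dict) -> bool:
    """Decide whether the actual request may be sent after the preflight."""
    if not 200 <= status < 300:
        return False
    allow_origin = response_headers.get("Access-Control-Allow-Origin", "")
    if allow_origin not in ("*", preflight["Origin"]):
        return False
    allowed_methods = _split(response_headers.get("Access-Control-Allow-Methods", ""))
    if preflight["Access-Control-Request-Method"].lower() not in allowed_methods:
        return False
    allowed_headers = _split(response_headers.get("Access-Control-Allow-Headers", ""))
    requested_headers = _split(preflight["Access-Control-Request-Headers"])
    return requested_headers <= allowed_headers


# Example response headers, as a gateway might return them for our app.
gateway_response = {
    "Access-Control-Allow-Origin": "https://app.ozkary.com",
    "Access-Control-Allow-Methods": "OPTIONS, GET, POST",
    "Access-Control-Allow-Headers": "Content-Type, x-requested-by",
}

post_preflight = build_preflight(
    "https://app.ozkary.com", "POST", ["Content-Type", "x-requested-by"]
)
put_preflight = build_preflight("https://app.ozkary.com", "PUT", [])
```

With these values, `preflight_allows(post_preflight, 200, gateway_response)` is true, while the same call with `put_preflight` is false because PUT is not in the allowed methods.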

For the cross-origin server to be configured properly, the policy needs to include the client origin (domain), the HTTP methods, and the headers that should be allowed. This is very important because it protects the API from possible exploits by apps hosted on unauthorized domains. It also controls the operations that can be called. For example, a GET request may be allowed while a POST request is not. This level of configuration helps with the authorization of certain operations, like read-only access, at the domain level. Now that we understand the importance of CORS, let's look at how we can support this scenario using an API gateway.
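The server side of that check can be sketched as follows. This is only an illustration of the idea, assuming a hypothetical `CorsPolicy` class and an `https://` origin; it is not the gateway's actual implementation. A request that does not match the policy simply gets no CORS headers back, which is what causes the browser to block the call.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CorsPolicy:
    """Hypothetical in-memory model of a CORS policy."""
    allowed_origins: frozenset
    allowed_methods: frozenset   # upper-case HTTP methods
    allowed_headers: frozenset   # lower-case header names

    def preflight_headers(self, origin: str, method: str, headers: list) -> dict:
        """Return the CORS response headers, or {} when the request is not allowed."""
        requested = {h.lower() for h in headers}
        if origin not in self.allowed_origins:
            return {}
        if method.upper() not in self.allowed_methods:
            return {}
        if not requested <= self.allowed_headers:
            return {}
        return {
            "Access-Control-Allow-Origin": origin,
            "Access-Control-Allow-Methods": ", ".join(sorted(self.allowed_methods)),
            "Access-Control-Allow-Headers": ", ".join(sorted(self.allowed_headers)),
        }


# A read-only policy: GET is allowed for our app, POST is not.
read_only = CorsPolicy(
    allowed_origins=frozenset({"https://app.ozkary.com"}),
    allowed_methods=frozenset({"GET", "OPTIONS"}),
    allowed_headers=frozenset({"content-type"}),
)

get_result = read_only.preflight_headers("https://app.ozkary.com", "GET", ["Content-Type"])
post_result = read_only.preflight_headers("https://app.ozkary.com", "POST", ["Content-Type"])
```

Here `get_result` carries the three `Access-Control-Allow-*` headers, while `post_result` is empty, so the browser would refuse to send the POST.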

What is Azure API Management?

The Azure API Management (APIM) service is a reverse proxy gateway that manages the integration of APIs by providing routing, security, and other infrastructure and governance resources. It handles cross-cutting concerns like security policies, routing, document (XML, JSON) transformation, and logging, in addition to other features. An APIM instance can host multiple API definitions, which are accessible via an API suffix or route information. Each API definition can have multiple operations or web methods. As an example, our service has a telemetry API and an audit API. Each of those APIs has two operations, to GET and POST information.

- api.services.com/telemetry
  - GET, POST operations

- api.services.com/audit
  - GET, POST operations
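The layout above can be pictured as a small lookup from API suffix to operations. The Python sketch below is purely illustrative: `API_DEFINITIONS` and `resolve` are hypothetical names, and the real gateway routing is far richer than this.

```python
from typing import Optional

# Hypothetical model: API suffix -> HTTP methods exposed by that API definition.
API_DEFINITIONS = {
    "telemetry": {"GET", "POST"},
    "audit": {"GET", "POST"},
}


def resolve(method: str, path: str) -> Optional[str]:
    """Return 'METHOD suffix' when the gateway hosts that operation, else None."""
    suffix = path.strip("/").split("/", 1)[0]
    operations = API_DEFINITIONS.get(suffix)
    if operations is None or method.upper() not in operations:
        return None
    return f"{method.upper()} {suffix}"
```

For example, `resolve("get", "/telemetry")` finds the telemetry GET operation, while `resolve("DELETE", "/audit")` and `resolve("GET", "/billing")` return `None` because no such operation or API is defined.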

For our purpose, we can use the security features of this gateway to give cross-origin client applications access to internal APIs that are only reachable via the gateway. This can be done by adding policies at the API level or to each API operation. Let's take a look at what that looks like when we are using the Azure Portal.

We can add the policy for all the operations, or we can add it to each operation. Usually, when we create an API, all the operations should have the same policies. For our case, we apply the policy at the API level, so all the operations are covered by the same policy. But what exactly does this policy look like? Let's review our policy and understand what it is really doing.

For our integration to work properly, we need to configure the following information:

- Allow the app.ozkary.com domain to call the API by using the allowed-origins policy. This shows as the access-control-allow-origin header on the response.

- Allow the OPTIONS, GET, and POST HTTP methods by using the allowed-methods policy. This shows as the access-control-allow-methods header on the response.

- Allow the Content-Type and x-requested-by headers by using the allowed-headers policy. This shows as the access-control-allow-headers header on the response.

Note: The response headers can be viewed in the Network tab of the browser developer tools.
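As a rough sketch of those three settings, the Python snippet below embeds a `cors` policy fragment as it might appear in the inbound section of the API policy and reads the values back with the standard library XML parser. The `https://` scheme on the origin, the `allow-credentials` value, and the helper name `read_policy` are assumptions made for illustration; the actual policy in your APIM instance may differ.

```python
import xml.etree.ElementTree as ET

# Assumed policy fragment; it would sit inside the <inbound> section of the API policy.
CORS_POLICY_XML = """
<cors allow-credentials="false">
    <allowed-origins>
        <origin>https://app.ozkary.com</origin>
    </allowed-origins>
    <allowed-methods>
        <method>OPTIONS</method>
        <method>GET</method>
        <method>POST</method>
    </allowed-methods>
    <allowed-headers>
        <header>Content-Type</header>
        <header>x-requested-by</header>
    </allowed-headers>
</cors>
"""


def read_policy(xml_text: str) -> dict:
    """Extract the allowed origins, methods, and headers from a cors fragment."""
    root = ET.fromstring(xml_text.strip())
    return {
        "origins": [e.text.strip() for e in root.findall("allowed-origins/origin")],
        "methods": [e.text.strip() for e in root.findall("allowed-methods/method")],
        "headers": [e.text.strip() for e in root.findall("allowed-headers/header")],
    }


policy = read_policy(CORS_POLICY_XML)
```

Reading the policy back like this is a convenient way to review, in one place, which origins, methods, and headers a given fragment really allows.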

This policy governs which cross-origin apps can call the API and which operations they can use. As an example, if the client application attempts to send a PUT or DELETE request, it will be blocked because those methods are not defined in the CORS policy. It is also important to note that we could use a wildcard character (*) for each policy, but this essentially indicates that any cross-origin app can make any operation call. In that case there is really no security, so this is not a recommended approach. Wildcards should only be used during development and never in production environments.
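One way to keep wildcards out of production is a small check before deployment. The Python sketch below is an assumption about how such a check could look; the dictionary layout and the function name `wildcard_sections` are hypothetical and not part of any Azure tooling.

```python
def wildcard_sections(policy: dict) -> list:
    """Return the names of the policy sections that contain the '*' wildcard."""
    return sorted(name for name, values in policy.items() if "*" in values)


# A permissive policy that is only acceptable during development.
dev_policy = {"origins": ["*"], "methods": ["*"], "headers": ["*"]}

# The restricted policy described in this article (https scheme assumed).
prod_policy = {
    "origins": ["https://app.ozkary.com"],
    "methods": ["OPTIONS", "GET", "POST"],
    "headers": ["Content-Type", "x-requested-by"],
}

dev_findings = wildcard_sections(dev_policy)
prod_findings = wildcard_sections(prod_policy)
```

A deployment script could refuse to publish a policy whenever the returned list is not empty, as it would be for `dev_policy` here.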

After Adding the Policy, CORS Does Not Work

In some cases, even when the policy is configured correctly, we may notice that it is not taking effect and the API request is still not allowed. When this is the case, we should look at the policy configuration at every level. In Azure APIM, there are three levels of configuration:

- All APIs - One policy for all the API definitions

- All Operations - All the operations under one API definition

- Each Operation - One specific operation

We may think that the configuration at the operation level takes precedence, but this is not the case when there is a <base/> entry, because that entry tells the gateway to include the parent configuration in this policy. To help prevent problems like this, make sure to review the higher-level configurations and, if necessary, remove the <base/> entry at the operation level.
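The effect of the <base/> entry can be illustrated with a deliberately simplified Python model. Here each level is a list of policy statements, and the string "base" stands in for <base/>. The function name `effective_policy` and the statement labels are hypothetical, and the sketch does not model how the gateway chooses between several CORS policies; it only shows which statements end up in scope.

```python
def effective_policy(levels: list) -> list:
    """Expand 'base' markers, going from the outermost level to the innermost."""
    inherited = []
    for statements in levels:
        expanded = []
        for statement in statements:
            if statement == "base":
                # <base/> pulls in everything resolved at the parent level.
                expanded.extend(inherited)
            else:
                expanded.append(statement)
        inherited = expanded
    return inherited


all_apis = ["cors: all-apis"]
all_operations = ["base", "cors: api"]

# The operation keeps <base/>, so the parent policies still apply.
with_base = effective_policy([all_apis, all_operations, ["base", "cors: operation"]])

# The operation drops <base/>, so only its own policy remains.
without_base = effective_policy([all_apis, all_operations, ["cors: operation"]])
```

In this model, `with_base` still contains the higher-level CORS entries ahead of the operation-level one, while `without_base` contains only the operation-level entry.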

Conclusion

A CORS policy is a very important security configuration when dealing with applications and APIs that are hosted on different domains. As resources are shared across domains, it is important to clearly define which cross-origin clients can access the API. When using a cloud platform to protect an API, we can use an API gateway to help us manage cross-cutting concerns like a CORS policy. This helps us minimize risks and provides enterprise-quality infrastructure.
