Overview

The discovery process involves identifying the problem, analyzing data sources, defining project requirements, establishing the project scope, and designing an effective architecture to address the identified challenges.

In this session, we will delve into the essential building blocks of data engineering, placing a spotlight on the discovery process. From framing the problem statement to navigating the intricacies of exploratory data analysis (EDA) using Python, VSCode, Jupyter Notebooks, and GitHub, you'll gain a solid understanding of the fundamental aspects that drive effective data engineering projects.

- Follow this GitHub repo during the presentation: (Give it a star)

- Read more information on my blog at:

👉 https://www.ozkary.com/2023/03/data-engineering-process-fundamentals.html

YouTube Video

Video Agenda

Introduction:

Unveiling the importance of the discovery process in data engineering.

Setting the stage with a real-world problem statement that will guide our exploration.

Setting the Stage:

Downloading and comprehending sample data to kickstart our discovery journey.

Configuring the development environment with VSCode and Jupyter Notebooks.

Exploratory Data Analysis (EDA):

Delving deep into EDA techniques with a focus on the discovery phase.

Demonstrating practical approaches using Python to uncover insights within the data.

Code-Centric Approach:

Advocating the significance of a code-centric approach during the discovery process.

Showcasing how a code-centric mindset enhances collaboration, repeatability, and efficiency.

Version Control with GitHub:

Integrating GitHub seamlessly into our workflow for version control and collaboration.

Managing changes effectively to ensure a streamlined data engineering discovery process.

Real-World Application:

Applying insights gained from EDA to address the initial problem statement.

Discussing practical solutions and strategies derived from the discovery process.

Key Takeaways:

Mastery of the foundational aspects of data engineering.

Hands-on experience with EDA techniques, emphasizing the discovery phase.

Appreciation for the value of a code-centric approach in the data engineering discovery process.

Some of the technologies that we will be covering:

- Python

- Data Analysis and Visualization

- Jupyter Notebook

- Visual Studio Code

Presentation

Data Engineering Overview

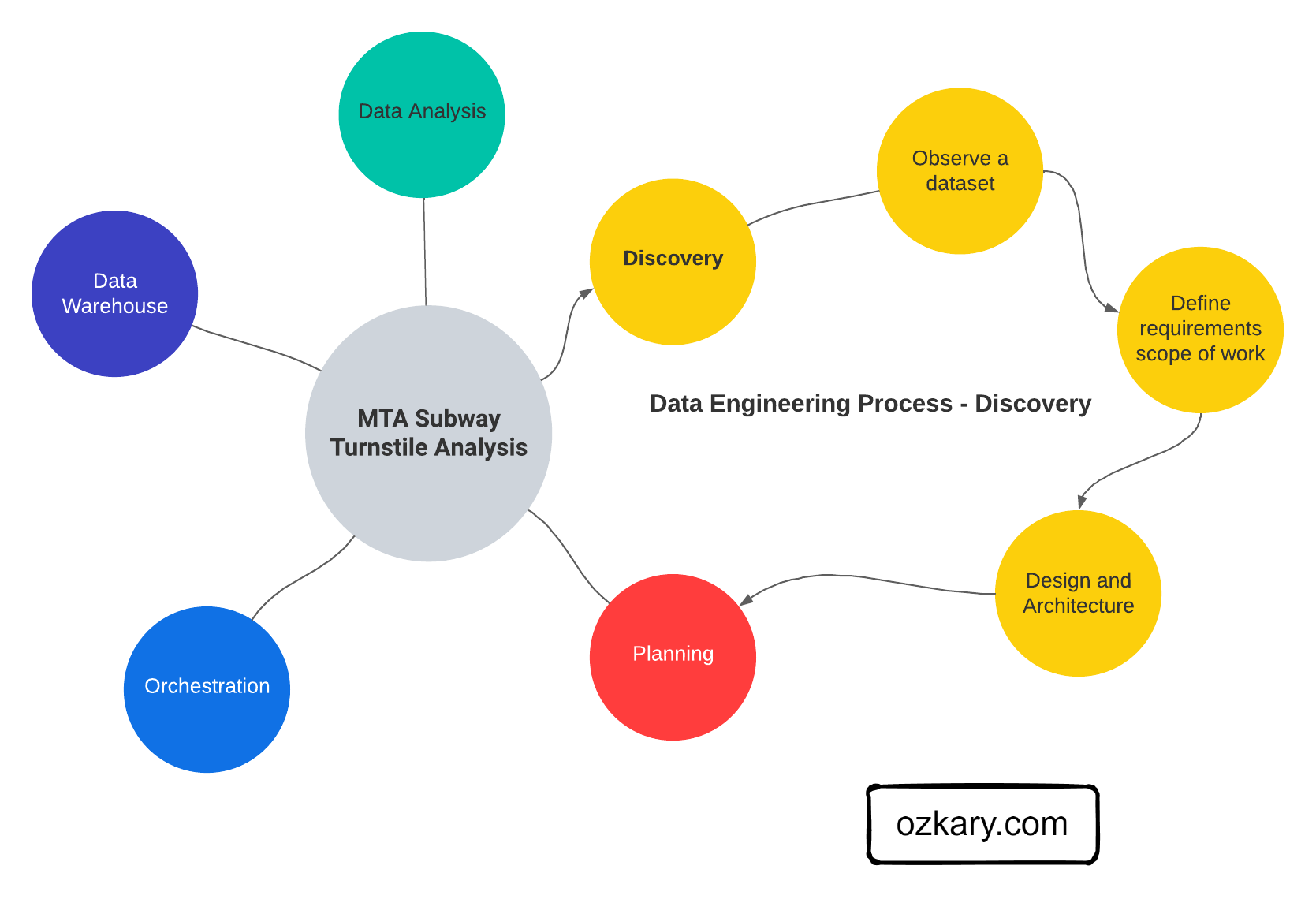

A Data Engineering Process involves executing steps to understand the problem, scope, design, and architecture for creating a solution. This enables ongoing big data analysis using analytical and visualization tools.

Topics

- Importance of the Discovery Process

- Setting the Stage - Technologies

- Exploratory Data Analysis (EDA)

- Code-Centric Approach

- Version Control

- Real-World Use Case

Follow this project: Give a star

Importance of the Discovery Process

The discovery process involves identifying the problem, analyzing data sources, defining project requirements, establishing the project scope, and designing an effective architecture to address the identified challenges.

- Clearly document the problem statement to understand the challenges the project aims to address.

- Make observations about the data, its structure, and sources during the discovery process.

- Define project requirements based on the observations, enabling the team to understand the scope and goals.

- Clearly outline the scope of the project, ensuring a focused and well-defined set of objectives.

- Use insights from the discovery phase to inform the design of the solution, including data architecture.

- Develop a robust project architecture that aligns with the defined requirements and scope.

Setting the Stage - Technologies

To set the stage, we need to identify and select the tools that can facilitate the analysis and documentation of the data. Here are key technologies that play a crucial role in this stage:

- Python: A versatile programming language with rich libraries for data manipulation, analysis, and scripting.

Use Cases: Data download, cleaning, exploration, and scripting for automation.

- Jupyter Notebooks: An interactive tool for creating and sharing documents containing live code, visualizations, and narrative text.

Use Cases: Exploratory data analysis, documentation, and code collaboration.

- Visual Studio Code: A lightweight, extensible code editor with powerful features for source code editing and debugging.

Use Cases: Writing and debugging code, integrating with version control systems like GitHub.

- SQL (Structured Query Language): A domain-specific language for managing and manipulating relational databases.

Use Cases: Querying databases, data extraction, and transformation.

Exploratory Data Analysis (EDA)

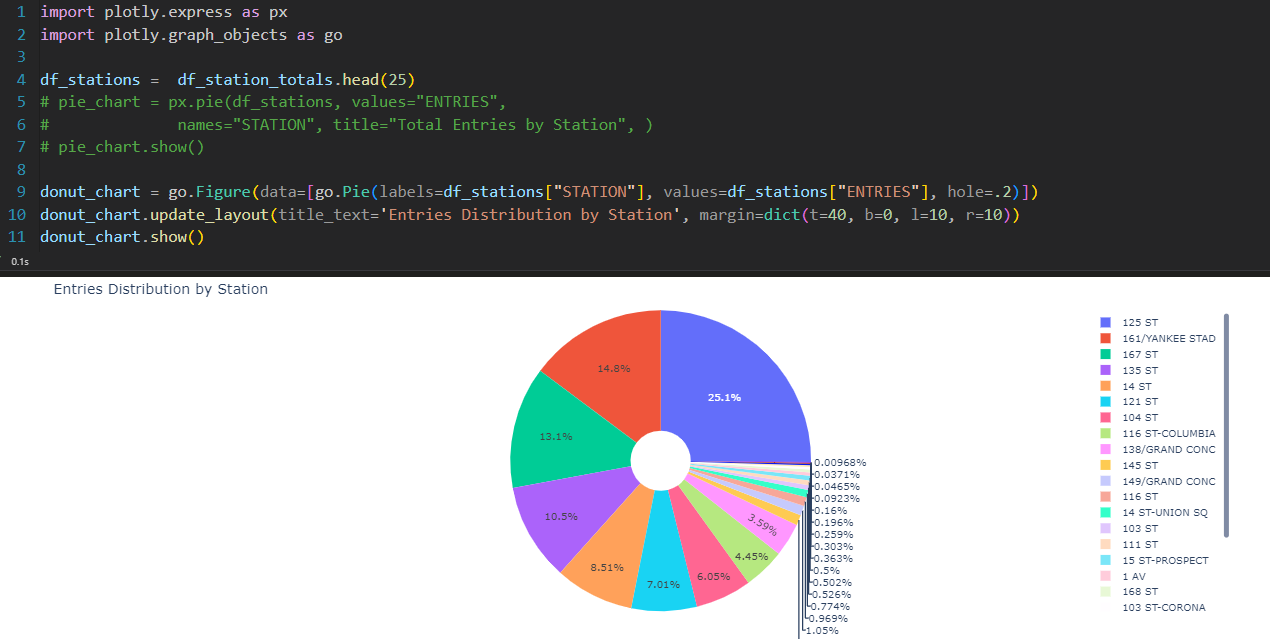

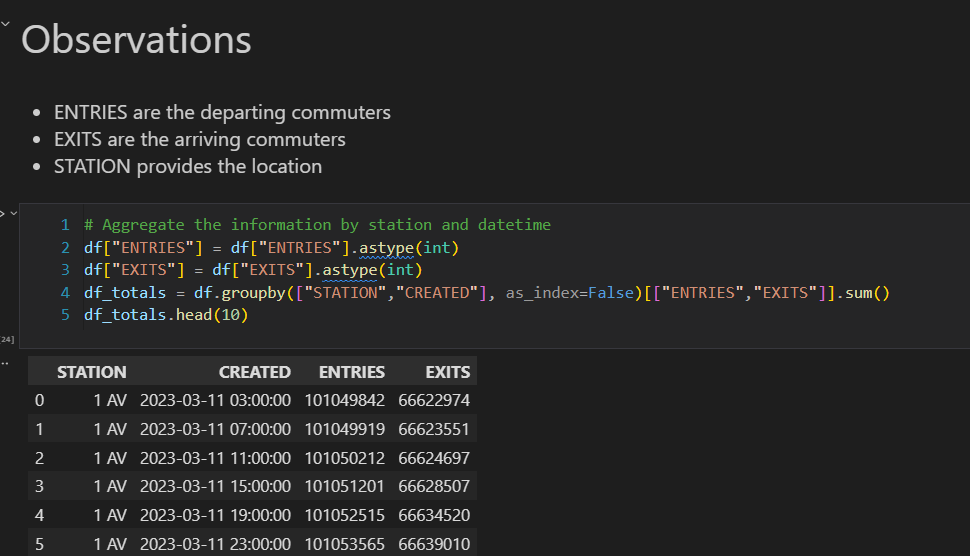

EDA is our go-to method for downloading, analyzing, understanding and documenting the intricacies of the datasets. It's like peeling back the layers of information to reveal the stories hidden within the data. Here's what EDA is all about:

EDA is the process of analyzing data to identify patterns, relationships, and anomalies, guiding the project's direction.

Python and Jupyter Notebook collaboratively empower us to download, describe, and transform data through live queries.

Insights gained from EDA set the foundation for informed decision-making in subsequent data engineering steps.

Code written on Jupyter Notebook can be exported and used as the starting point for components for the data pipeline and transformation services.

Code-Centric Approach

A code-centric approach, using programming languages and tools in EDA, helps us understand the coding methodology for building data structures, defining schemas, and establishing relationships. This robust understanding seamlessly guides project implementation.

Code delves deep into data intricacies, revealing integration and transformation challenges often unclear with visual tools.

Using code taps into Pandas and Numpy libraries, empowering robust manipulation of data frames, establishment of loading schemas, and addressing transformation needs.

Code-centricity enables sophisticated analyses, covering aggregation, distribution, and in-depth examinations of the data.

While visual tools have their merits, a code-centric approach excels in hands-on, detailed data exploration, uncovering subtle nuances and potential challenges.

Version Control

Using a tool like GitHub is essential for effective version control and collaboration in our discovery process. GitHub enables us to track our exploratory code and Jupyter Notebooks, fostering collaboration, documentation, and comprehensive project management. Here's how GitHub enhances our process:

Centralized Tracking: GitHub centralizes tracking and managing our exploratory code and Jupyter Notebooks, ensuring a transparent and organized record of our data exploration.

Sharing: Easily share code and Notebooks with team members on GitHub, fostering seamless collaboration and knowledge sharing.

Documentation: GitHub supports Markdown, enabling comprehensive documentation of processes, findings, and insights within the same repository.

Project Management: GitHub acts as a project management hub, facilitating CI/CD pipeline integration for smooth and automated delivery of data engineering projects.

Summary

The data engineering discovery process involves defining the problem statement, gathering requirements, and determining the scope of work. It also includes a data analysis exercise utilizing Python and Jupyter Notebooks or other tools to extract valuable insights from the data. These steps collectively lay the foundation for successful data engineering endeavors.

Thanks for reading.

Send question or comment at Twitter @ozkary

👍 Originally published by ozkary.com

0 comments :

Post a Comment

What do you think?